In 2024, the American Society on Aging hosted the first AI Summit within the OnTech conference, part of the annual On Aging conference. Co-authors and Journal Guest Editors Dan Andersen and Faizan Wajid, members of the Explore Digits team, were honored to serve on a panel that discussed how AI is transforming aging and aging services. The presentation's topic was "Ageism in the Era of Generative Artificial Intelligence." During the session, the authors gave a live demonstration of some potential challenges posed by ever-evolving AI tools, with a focus on image generation. In this article, we revisit that topic by providing background on generative AI, showing examples of ageism in image generation, and identifying steps the aging sector can take to combat the issue.

Machine Learning and Generative AI

Machine Learning (ML) is a subset of Artificial Intelligence (AI) that enables computers to learn from data, identify patterns, and make decisions with minimal human intervention. Generative AI (GenAI) builds on ML and focuses on producing new content, such as text, images, music, or video. It learns patterns from vast amounts of training data and uses them to create new outputs in response to users' requests.

Ethics and Bias in AI

The role of AI in society has expanded significantly, influencing everything from search engines to recommender systems. This growth brings challenges, particularly bias: the tendency of AI systems to favor certain outcomes over others. Bias usually stems not from explicit programming but from the data used for training. For instance, facial recognition technologies have struggled to accurately identify individuals from minority groups because those groups were underrepresented in the training data. Data-driven bias of this kind can surface in many forms, including ageism.

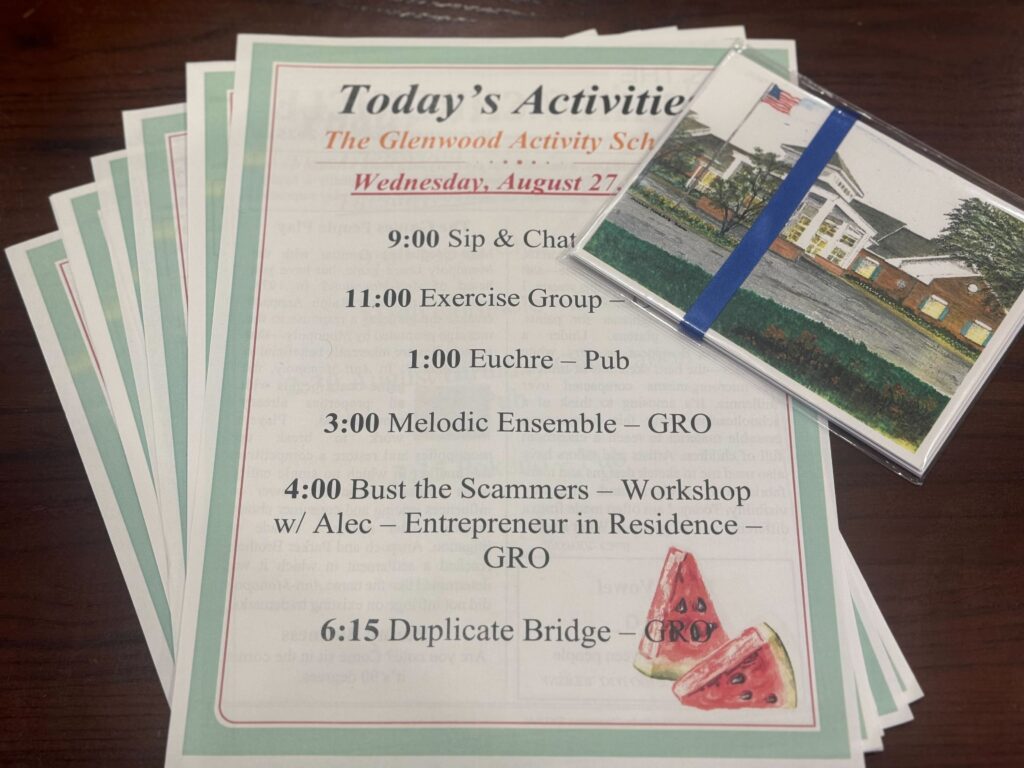

ML models are often trained on labeled data, that is, data that humans have described or annotated so the model can learn to produce the desired outputs. This is where age bias can enter a model's outputs. Below are a few examples of stock photos available on the internet whose captions can introduce such bias.

In this image, the caption or alternative text reads, "elderly women gather in a room with colorful umbrellas, surrounded by decorative curtains, while being accompanied by caregivers or staff members. Umbrellas display various colors and patterns among the participants." The caption does not indicate that the photo appears to show an institutional setting, such as a nursing home, or that at least two of the women are using mobility aids.

The caption for the photo below reads, "older person walking." Note that it does not mention the rollator.

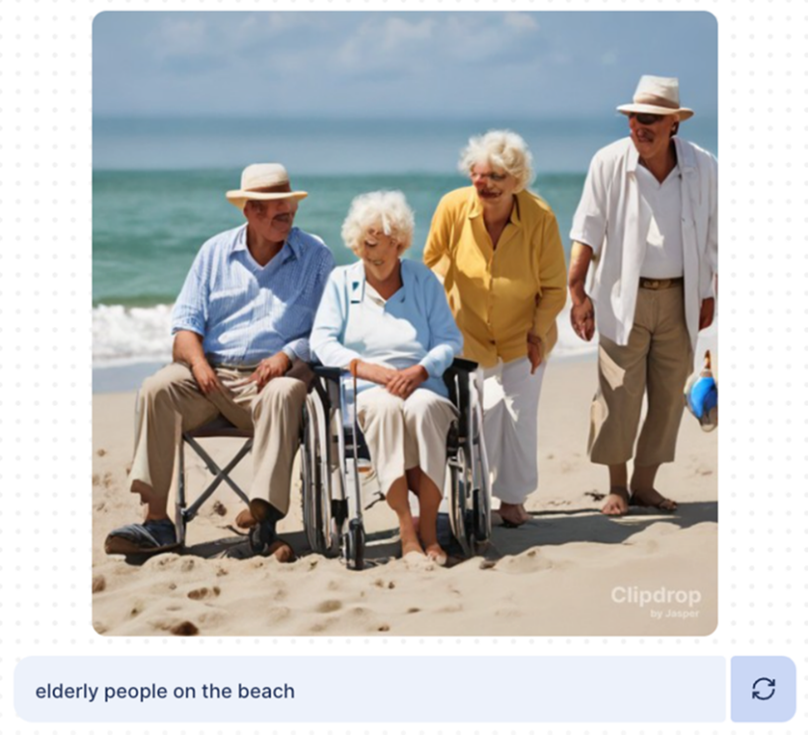

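Online images often portray older adults using walkers, canes, rollators, or wheelchairs. Because captions like these rarely mention such aids, a model trained on them can learn to treat the aids as part of what an "older person" looks like.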
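To make the caption problem concrete, here is a minimal Python sketch of how a dataset curator might flag captions that omit details a human reviewer can see in the image, such as a mobility aid or an institutional setting. It assumes reviewers have already tagged what each photo shows; the detail names, keyword lists, the third sample caption, and the `flag_incomplete_captions` function are all hypothetical illustrations, not part of any real dataset or tool.

```python
from dataclasses import dataclass, field

# Hypothetical vocabulary: terms a caption would need to use to mention each detail.
# Plain substring matching is deliberately naive (e.g., "cane" also matches "hurricane").
DETAIL_TERMS = {
    "assistive_device": ("walker", "rollator", "cane", "wheelchair"),
    "institutional_setting": ("nursing home", "care facility", "assisted living"),
}


@dataclass
class CaptionedImage:
    caption: str
    # Details a human reviewer confirmed are visible in the image (hypothetical labels).
    visible_details: set = field(default_factory=set)


def missing_details(item):
    """Return the reviewer-confirmed details that the caption never mentions."""
    text = item.caption.lower()
    return {
        detail
        for detail in item.visible_details
        if not any(term in text for term in DETAIL_TERMS.get(detail, ()))
    }


def flag_incomplete_captions(items):
    """List (caption, missing details) pairs for captions that omit something visible."""
    flagged = []
    for item in items:
        missing = missing_details(item)
        if missing:
            flagged.append((item.caption, missing))
    return flagged


# First two captions are adapted from the stock-photo examples; the third is invented.
dataset = [
    CaptionedImage("older person walking", {"assistive_device"}),
    CaptionedImage(
        "elderly women gather in a room with colorful umbrellas",
        {"assistive_device", "institutional_setting"},
    ),
    CaptionedImage("older man using a rollator in a park", {"assistive_device"}),
]

for caption, missing in flag_incomplete_captions(dataset):
    print(f"{caption!r} omits: {sorted(missing)}")
```

Keyword matching cannot tell whether a photo is accurately described; the sketch only shows where human review could be focused before captions are used as training labels.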

Ageism: A Hidden Bias in AI

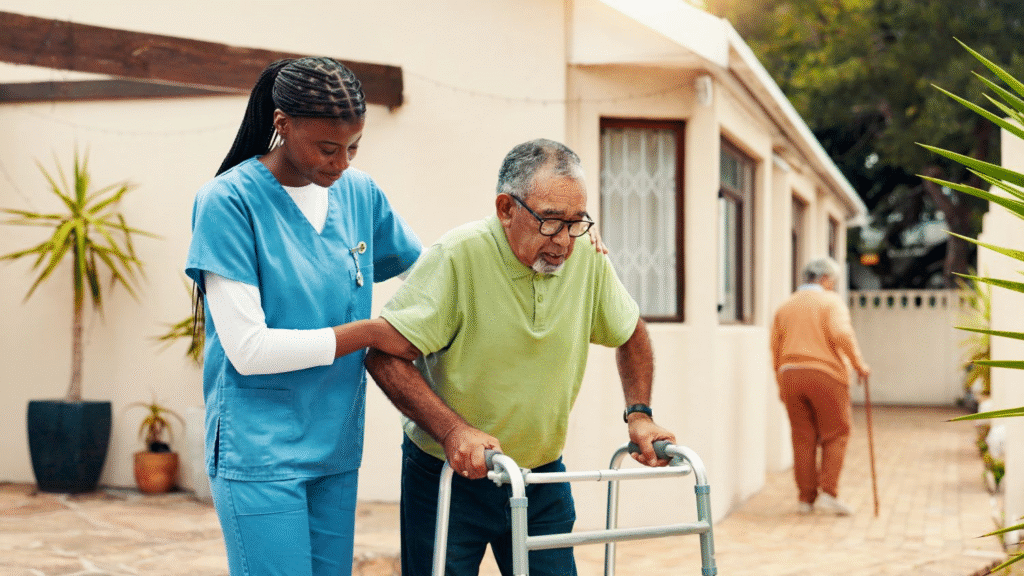

Ageism in AI, especially in image generation, manifests as stereotypes and biases against older adults. Training data often underrepresent older people, which leads to less accurate or biased outputs. Generated images, for example, frequently depict older adults as disabled, frail, or uniformly white. These stereotypes can perpetuate societal biases and inaccuracies, and they underscore the need for more balanced representation.

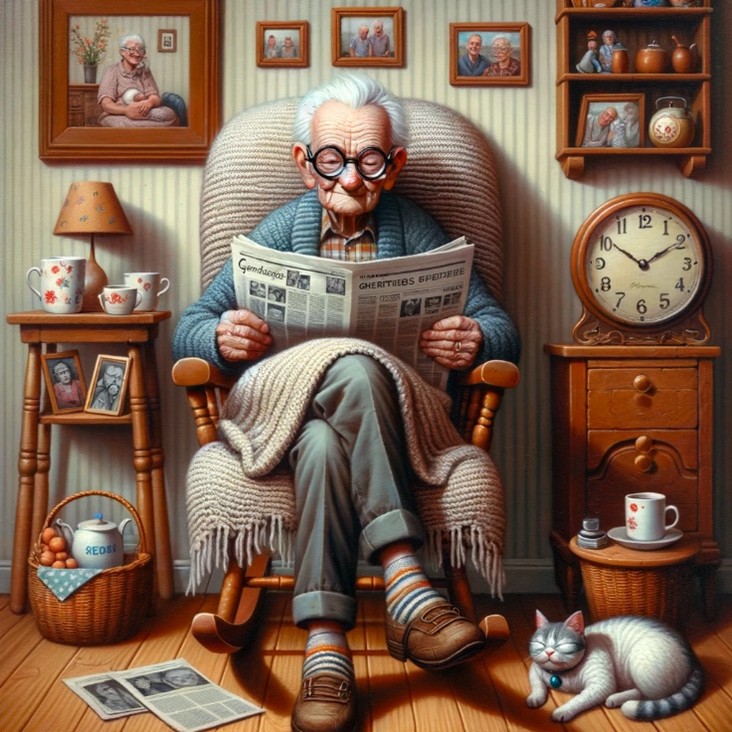

The examples below show images generated from "prompts," that is, plain-language requests describing what the user wants. Some prompts use "age friendly" terminology in line with the spirit of reframing aging; others use terms a lay audience is more likely to use. In most cases, the generative AI tools portrayed older adults in biased and unflattering ways.

Image 1. Prompt: "image of older people." Note the lack of diversity.

Image 2. Prompt: "Painting of a stereotypical older adult."

Image 3. Prompt: "Photo realistic drawing of an elderly woman."

Image 4. Follow-up prompt: "Another one please."

Image 5. Prompt: "Elderly people on the beach."

Combating Ageism in AI

Addressing ageism in AI starts with recognizing that the problem exists and educating the public about why biases must be identified, reduced, and eliminated. A key strategy is to diversify training data so that it reflects the real diversity of people in age, ethnicity, and other characteristics. This includes involving stakeholders from various age groups and underrepresented populations in data curation and in the AI design process. Continuously monitoring AI systems for biases and enforcing ethical guidelines that prioritize fairness, transparency, and accountability are crucial steps toward responsible AI.
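Continuous monitoring can start with something as simple as tallying how a tool depicts older adults across a batch of outputs. The Python sketch below assumes a reviewer has labeled several images generated from the same prompt with two attributes; the attribute names, the placeholder labels, and the 50 percent alert threshold are hypothetical choices for illustration, not measured results or an established standard.

```python
from collections import Counter

# Placeholder reviewer labels for one batch of images generated from a single prompt.
# These values are invented for illustration; they are not results from any real tool.
batch = [
    {"assistive_device": True, "perceived_ethnicity": "white"},
    {"assistive_device": True, "perceived_ethnicity": "white"},
    {"assistive_device": False, "perceived_ethnicity": "Black"},
    {"assistive_device": True, "perceived_ethnicity": "white"},
]

# Hypothetical cutoff; in practice it should be set against a real reference population.
ALERT_THRESHOLD = 0.5


def share_with_device(labels):
    """Fraction of images showing a walker, cane, rollator, or wheelchair."""
    return sum(item["assistive_device"] for item in labels) / len(labels)


def largest_group_share(labels, attribute):
    """Fraction of images that fall in the single most common value of `attribute`."""
    counts = Counter(item[attribute] for item in labels)
    return counts.most_common(1)[0][1] / len(labels)


def audit(labels):
    """Return human-readable alerts for this batch (an empty list means no alerts)."""
    alerts = []
    if share_with_device(labels) > ALERT_THRESHOLD:
        alerts.append("assistive devices over-represented")
    if largest_group_share(labels, "perceived_ethnicity") > ALERT_THRESHOLD:
        alerts.append("low ethnic diversity")
    return alerts


print(audit(batch))
# prints ['assistive devices over-represented', 'low ethnic diversity']
```

Rerunning a check like this whenever a model or prompt template changes is one way to make bias monitoring routine rather than a one-time demonstration; the human labeling step remains the costly and judgment-laden part.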

Conclusion

Integrating ethical considerations into the development of AI technologies is crucial. As AI continues to transform society, a sustained commitment to ethical development, diversity, and continuous improvement can help ensure that technology advances in ways that promote inclusivity and address biases such as ageism, for the benefit of all.

Dan Andersen, PhD, is chief health informatics officer at Explore Digits in Rockville, Md. Previously, he directed Healthcare Analytics Services for the RELI Group, Inc., and served as a principal at Andersen Home Advantage; adjunct faculty member and program coordinator at McDaniel College; program manager at Lantana Consulting Group; principal research associate at IMPAQ International; adjunct faculty member at the University of Maryland, Baltimore; and health insurance specialist/research analyst at the Centers for Medicare & Medicaid Services.

Vishesh Gupta is a Computer Science and Mathematics undergraduate at the University of Maryland, specializing in natural language processing (NLP) and large language models (LLMs). He is an AI/ML intern at Explore Digits, contributing to the research and development of AI-driven tools and products.

Nikhil Nirhale, MS, is lead data scientist at Explore Digits, Inc. Previously he was lead data scientist on CCSQ FAS and a product engineer for the Feedback Analysis System.

Faizan Wajid, PhD, is a senior data and research scientist at Explore Digits, where he develops AI-driven tools to support healthcare providers, from policymakers to caregivers. His research interests include sensors and signal analysis using wearable technologies, as well as large language models (LLMs).

Photo credit: Shutterstock/Jirsak